Natural language processing, or NLP, is one of the most complex and innovative advances in artificial intelligence (AI) and search engine algorithms. Not surprisingly, Google has become a leader in the NLP space. With BERT, its earlier natural language model, and SMITH, a newer model that Google researchers described in 2020, Google has developed AI that understands human language proficiently. This technology can also be used in AI-generated content creation.

With their exceptional accuracy, Google’s NLP algorithms have changed the AI game. So, what does this mean for SEO? This article will dive into the details of Google’s NLP technologies and how you can use them to rank better in search engine results.

What Is Natural Language Processing?

Natural language processing (NLP) is a field of computer science and artificial intelligence that studies how to make computers understand human language. Unlike earlier rule-based approaches, modern NLP relies heavily on deep learning.

NLP is considered an important component of artificial intelligence because it enables computers to interact with humans in a way that feels natural.

While it may sound like NLP exists only to improve Google’s search results and put writers out of business, this technology is used in a wide variety of ways beyond SEO.

Here are the most common:

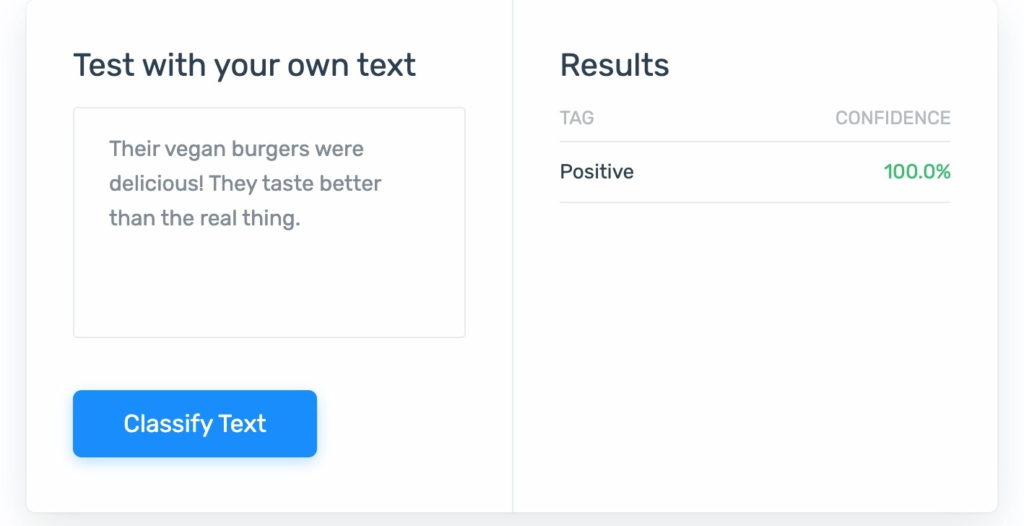

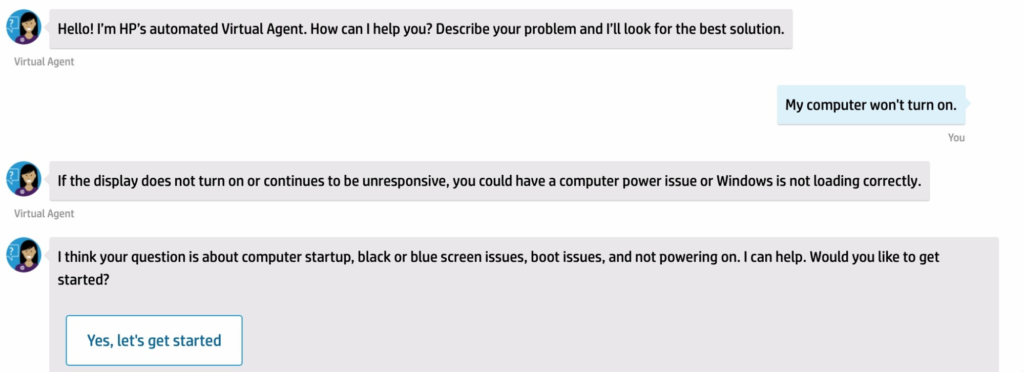

1. Sentiment analysis: NLP that gauges people’s emotional levels to determine things such as customer satisfaction.

2. Chatbots: These are the chat screens that pop up on help pages or general websites. They have a knack for reducing the workload on customer support centers.

4. Speech recognition: This NLP takes audio and translates it into commands and more.

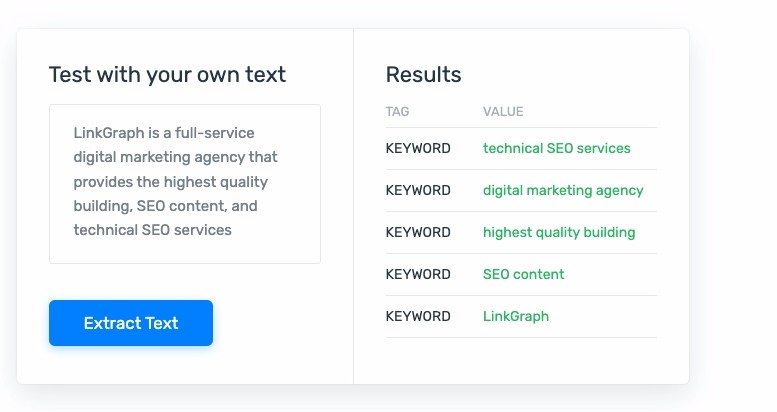

Text classification, extraction, and summarization: These forms of NLP can analyze text then reformat it to be easier for humans to use, analyze, and understand. Text extraction can be quite helpful when it comes to tasks such as medical coding and catching errors in billing.
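To make sentiment analysis, the first item in the list above, a little more concrete, here is a minimal sketch. It assumes a tiny hand-made word list (the `POSITIVE` and `NEGATIVE` sets are hypothetical), whereas real systems learn sentiment from large amounts of labeled text.

```python
import re

# Hypothetical mini-lexicons for illustration only.
POSITIVE = {"great", "love", "excellent", "helpful", "fast"}
NEGATIVE = {"bad", "slow", "broken", "terrible", "rude"}

def sentiment_score(text: str) -> float:
    """Return a score in [-1, 1]: below 0 is negative, above 0 is positive."""
    words = re.findall(r"[a-z']+", text.lower())
    pos = sum(w in POSITIVE for w in words)
    neg = sum(w in NEGATIVE for w in words)
    total = pos + neg
    # No known sentiment words means a neutral score.
    return 0.0 if total == 0 else (pos - neg) / total

print(sentiment_score("Great product, and support was fast and helpful."))  # 1.0
print(sentiment_score("Terrible experience. The app is slow and broken."))  # -1.0
```

A word list like this misses sarcasm and negation ("not great"), which is one reason modern sentiment analysis leans on deep learning instead.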

What Is Deep Learning?

Deep learning is a category of machine learning that is loosely modeled on the networks of neurons in the human brain. This form of machine learning is often considered more sophisticated than traditional machine learning models.

Because deep learning networks are inspired by the brain, they can pick up on patterns in human behavior and learn a lot from them. Deep learning algorithms often work in two parts: one part makes predictions, while the other measures how wrong those predictions were and refines the model.
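The predict-then-refine idea can be sketched in a few lines. This is a deliberately tiny illustration, assuming a single adjustable weight rather than a deep network; the `train` function and its parameters are made up for this example.

```python
def train(samples, steps=200, lr=0.05):
    """Learn a weight w so that w * x approximates y."""
    w = 0.0
    for _ in range(steps):
        for x, y in samples:
            prediction = w * x       # part 1: make a prediction
            error = prediction - y   # how far off was it?
            w -= lr * error * x      # part 2: refine the weight (gradient step)
    return w

# The hidden rule in this toy data is y = 2 * x.
samples = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
print(round(train(samples), 3))  # 2.0
```

A real deep learning model repeats the same loop with millions or billions of weights arranged in layers.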

Deep learning has been used in household devices, public environments, and the workplace for some time. The most common applications include:

- Self-driving cars

- Voice remotes

- Credit card fraud detection

- Medical devices

- Satellite-based national defense

How Does NLP Affect SEO?

Few updates to Google’s search algorithm have disrupted SEO standards like natural language processing models. When news of Google’s SMITH broke, SEO specialists scrambled to understand how the algorithm works and how to produce content that meets its standards. However, as with most algorithm updates, time usually reveals how to meet and exceed content standards so your content has the best chance of making it into the SERPs.

Essentially, NLP helps Google provide searchers with better search results based on their intent and a clearer understanding of a site’s content. This means that only those sites providing the best content hold their standings in the SERPs. Content that doesn’t serve a searcher’s intent gets buried on a deeper SERP or doesn’t show up at all.

What Is Google BERT?

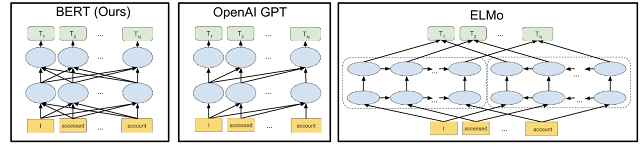

The BERT (Bidirectional Encoder Representations from Transformers) algorithm was rolled out in 2019, and Google described it as one of the biggest leaps forward in the history of Search. BERT is an NLP model that works to understand text in order to provide superior search results.

More specifically, BERT is a neural network that is designed to better understand the context of words in a sentence. The algorithm is able to learn the relationships between words in a sentence by using a technique called pretraining.

The goal of the BERT algorithm is to improve the accuracy of natural language processing tasks, such as machine translation and question answering.

How Does the Google BERT Algorithm Work?

The BERT algorithm achieves its goal through transfer learning. Instead of training a neural network from scratch, transfer learning starts from a network that has already been trained on a large dataset and adapts it to a new task, which improves accuracy.
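Here is a rough sketch of what transfer learning looks like in practice. It assumes a tiny hand-written table of word vectors (`PRETRAINED`, entirely hypothetical) standing in for a network trained on a large dataset. Only the small classifier on top is trained; the "pretrained" part stays frozen. This illustrates the general idea and is not how BERT itself is implemented.

```python
import math

# Hypothetical "pretrained" word vectors, standing in for a large pretrained network.
PRETRAINED = {
    "goal": (1.0, 0.0), "match": (0.9, 0.1), "team": (0.8, 0.2),
    "pasta": (0.0, 1.0), "sauce": (0.1, 0.9), "bake": (0.2, 0.8),
}

def embed(text):
    """Frozen feature extractor: average the vectors of the known words."""
    vecs = [PRETRAINED[w] for w in text.lower().split() if w in PRETRAINED]
    if not vecs:
        return (0.0, 0.0)
    return tuple(sum(v[i] for v in vecs) / len(vecs) for i in range(2))

def predict(text, w, b):
    """Probability that the text is about sports (1) rather than food (0)."""
    x = embed(text)
    return 1 / (1 + math.exp(-(w[0] * x[0] + w[1] * x[1] + b)))

def train_head(examples, epochs=500, lr=0.5):
    """Train only the small classifier head; PRETRAINED is never updated."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for text, label in examples:
            x = embed(text)
            gradient = predict(text, w, b) - label
            w[0] -= lr * gradient * x[0]
            w[1] -= lr * gradient * x[1]
            b -= lr * gradient
    return w, b

examples = [("team goal", 1), ("match goal", 1), ("pasta sauce", 0), ("bake pasta", 0)]
w, b = train_head(examples)
print(predict("the team won the match", w, b) > 0.5)  # True
print(predict("bake the sauce", w, b) > 0.5)          # False
```

Four labeled examples are enough here because the frozen vectors already carry most of the meaning, which is the whole appeal of transfer learning.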

Unlike many of Google’s updates, BERT’s inner workings are open source. The algorithm is based on a paper that Google published in 2018. According to that paper, BERT uses a bidirectional contextual model, which reads the words on both sides of a term, to better understand the meaning of individual words or phrases. The result is finely tuned content classification.

For example:

If you’re looking for a bar for happy hour versus a bar for your bench press equipment, Google will show you the correct kind of bar based on how the word is used in context within a page.
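The "bar" example can be imitated with a toy script. This sketch assumes hand-picked cue words for each sense (the `SENSES` table is hypothetical) and simply counts which cues appear on either side of the target word. BERT learns context from data instead of using a word list, so treat this only as an illustration of reading context in both directions.

```python
# Hypothetical cue words for two senses of "bar".
SENSES = {
    "pub": {"happy", "hour", "drinks", "cocktail", "beer"},
    "gym equipment": {"bench", "press", "weights", "barbell", "squat"},
}

def disambiguate(sentence: str, target: str = "bar") -> str:
    """Pick the sense whose cue words overlap most with the surrounding words."""
    words = [w.strip(".,!?").lower() for w in sentence.split()]
    if target not in words:
        raise ValueError(f"'{target}' does not appear in the sentence")
    i = words.index(target)
    # Look at the words before AND after the target (both directions).
    context = set(words[:i]) | set(words[i + 1:])
    # On a tie, max() keeps the first sense listed in SENSES.
    return max(SENSES, key=lambda sense: len(SENSES[sense] & context))

print(disambiguate("Where is a good bar for happy hour?"))      # pub
print(disambiguate("I need a longer bar for my bench press."))  # gym equipment
```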

What Else Makes BERT Different?

BERT was pretrained on Cloud Tensor Processing Units (TPUs), which accelerated its ability to learn from existing samples of text. Pretraining means training a neural network on a large dataset before it is put to work. The pretrained network is then used to process data similar to the data it was trained on. With Cloud TPUs, BERT could process that data very quickly, and the project also served as a test of Google Cloud.
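BERT’s pretraining is built around a fill-in-the-blank task: hide some words in a sentence and ask the model to guess them from the surrounding context. The sketch below shows how one such training example could be prepared. It is simplified (the function name and parameters are made up, and every selected word is replaced with `[MASK]`), so read it as an illustration rather than BERT’s exact recipe.

```python
import random

def make_masked_example(tokens, mask_rate=0.15, seed=0):
    """Hide a fraction of the tokens and remember the originals as labels."""
    rng = random.Random(seed)  # fixed seed so the example is repeatable
    n_mask = max(1, round(len(tokens) * mask_rate))
    positions = set(rng.sample(range(len(tokens)), n_mask))
    inputs = ["[MASK]" if i in positions else t for i, t in enumerate(tokens)]
    labels = {i: tokens[i] for i in sorted(positions)}
    return inputs, labels

tokens = "the bat devoured the mosquito near the lake".split()
inputs, labels = make_masked_example(tokens)
print(inputs)  # the sentence with one word replaced by [MASK]
print(labels)  # position -> original word the model should predict
```

During pretraining, the model sees the masked sentence and is scored on how well it recovers the hidden words, using context from both the left and the right.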

After extensive pretraining, BERT achieves higher accuracy than previous natural language processing algorithms because it better understands the context of words in a sentence.

How many text samples did BERT need? The original English model was pretrained on roughly 3.3 billion words drawn from books and Wikipedia, and multilingual versions cover many languages beyond English.

How Did Google’s BERT Update Affect Websites?

The impact of the BERT update on websites was twofold. First, the update improved the accuracy of Google’s search results. Because the pages ranking at the top were better matched to the query, they tended to see a higher click-through rate (CTR).

Second, the BERT update increased the importance of website content. Websites that have high-quality, relevant content are more likely to rank higher in Google’s search results.

What Are Google BERT’s Limitations?

BERT is a powerful tool, but its capabilities have limits. While it’s easy to get carried away with how neat this NLP model is, keep in mind that BERT isn’t capable of all human cognitive processes, and that limits how well it can understand content.

BERT Is a Text-Only Algorithm

First, BERT is only effective for natural language processing tasks that involve text. It cannot be used for tasks that involve images or other forms of data. However, keep in mind that BERT can read your alt text, which can help you appear in Google image searches.

BERT Doesn’t Understand the ‘Whole Picture’

Second, BERT is not effective for tasks that require understanding long stretches of text. Essentially, BERT is a pro at words within sentences, but it is not capable of understanding entire articles.

For example, BERT can understand that the “bat” in the following sentence refers to the mammal rather than a wooden baseball bat: The bat devoured the mosquito. But it is not effective for tasks that require following meaning across many paragraphs or a whole document.

What Is the Google SMITH Algorithm?

The Google SMITH (Siamese Multi-depth Transformer-based Hierarchical) algorithm is a model designed by Google researchers to understand long documents. It looks at natural language, learns how the meaning of phrases relates to their distance from one another, and creates a hierarchy of information that allows pages to be indexed more accurately.

This allows SMITH to perform content classification more efficiently.
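The two ideas in SMITH’s name can be sketched with a toy example. "Hierarchical" means a long document is first split into smaller blocks, each block is encoded, and the block results are combined into one document representation. "Siamese" means the same encoder is applied to both documents being compared. The sketch below assumes simple word counts in place of a Transformer, and every function name is made up for illustration.

```python
import math
import re
from collections import Counter

def split_into_blocks(text, max_words=12):
    """Greedily pack whole sentences into blocks of at most max_words words."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    blocks, current = [], []
    for sentence in sentences:
        words = sentence.split()
        if current and len(current) + len(words) > max_words:
            blocks.append(current)
            current = []
        current.extend(words)
    if current:
        blocks.append(current)
    return blocks

def encode_block(words):
    """Level 1: encode one block (here, just lowercase word counts)."""
    return Counter(w.strip(".,!?").lower() for w in words)

def encode_document(text):
    """Level 2: combine the block encodings into one document encoding."""
    document = Counter()
    for block in split_into_blocks(text):
        document.update(encode_block(block))
    return document

def similarity(text_a, text_b):
    """Siamese step: run the SAME encoder on both texts, then compare (cosine)."""
    a, b = encode_document(text_a), encode_document(text_b)
    dot = sum(a[k] * b[k] for k in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

seo_a = "Search engines rank pages. Good content helps pages rank."
seo_b = "Pages with good content rank well in search engines."
recipe = "Boil the pasta. Stir the sauce and bake the bread."
print(similarity(seo_a, seo_b) > similarity(seo_a, recipe))  # True
```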

Another interesting feature of SMITH is that it can function as a text predictor. Other companies have been making big waves with NLP as well (think of OpenAI’s much-discussed GPT-3 beta last year). Some of these technologies could help others build their own search engines.

How Did Google’s SMITH Update Affect Websites?

Google has not confirmed that SMITH is live in Search, so its effect on websites is hard to measure directly. The model was designed to improve how accurately long documents are understood, and that kind of understanding leaves less room for manipulative techniques used to influence rank. As spammy links, black hat SEO, and low-quality automated content become easier to spot, models like SMITH raise the bar for quality content and organic link building.

Some of the most common manipulative techniques that are likely to lose their effect include:

- keyword stuffing

- link buying

- excessive use of anchor text

Websites that are found to be using these techniques can be penalized by Google, which results in a decrease in their search ranking.

What Is the Difference Between Google’s SMITH Update and Google BERT?

Both the BERT model and the SMITH model give Google better language understanding and page indexing. The main difference is scale: BERT works best on short passages such as sentences and paragraphs, while SMITH is designed to understand long documents. We know that Google already likes long-form content, and if SMITH goes live, Google will understand longer content even more effectively. SMITH could improve news recommendations, related article recommendations, and document clustering.

How to Adjust Your SEO Strategy for Google NLP Algorithms

While Google claims you cannot optimize for BERT or SMITH, understanding how to optimize for NLP can make an impact on your site’s performance in the SERPs. Because BERT focuses on user intent, you should understand the intent behind any search query you want to optimize for.

Google is often a bit cagey about when it rolls out its algorithms, and it continues to be secretive about when SMITH will fully roll out. But it’s always best to assume the change is coming and start optimizing for it.

SMITH is likely just one of many iterations in Google’s long-term goal of maintaining their dominance in NLP and machine learning technology. As Google improves its understanding of complete documents, good information architecture will be even more important.

How Can You Optimize Your Content for Google’s NLP Algorithms?

- Make sure your content is well-formatted and easy to read. Maintain heading best practices and other readability best practices. These include:

  - Keep your sentences under 20 words

  - Use bullet point lists for lists of more than two items

  - Use the correct heading hierarchy

  - Avoid presenting readers with an impenetrable block of text

- Use clear, concise language that is easy to understand. Don’t overly complicate your sentence structures. By limiting your sentence length, you likely will also streamline your thoughts.

- Avoid using complex or difficult words that may confuse Google’s algorithms. Ditch the thesaurus and keep your sentences straightforward. Keep in mind that the shortest way to say something is often the best.

- Use keywords and Focus Terms that are relevant to your topic. Semantically related Focus Terms can help Google’s natural language processors better understand the entirety of your page.

- Make sure your content is fresh and up-to-date. Remember that the motivation of these NLP algorithms is to improve search results while weeding out spammy, outdated content.

- Write interesting and engaging content that people will want to read. You can never go wrong with providing searchers with the best content for their needs. Keep in mind search intent and topical depth.

- Your customer reviews matter. Google’s NLP can likely perform entity sentiment analysis, so don’t ignore bad reviews. If you receive negative reviews, in any language, Google’s entity sentiment analysis may count them against you in the SERPs.

- Provide clear answers to searchers’ questions. If you want to wind up in a featured snippet, Google’s NLP has to be able to pull your answer out of the page through text extraction and entity analysis. Google can home in on specific information to display to searchers, so state your answer plainly.
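A couple of the readability tips above are easy to check automatically before you publish. The sketch below is one possible way to do it, assuming plain text input and a simple rule for where sentences end. The function names are made up for this example.

```python
import re

def long_sentences(text, max_words=20):
    """Return the sentences that contain more than max_words words."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return [s for s in sentences if len(s.split()) > max_words]

def heading_skips(levels):
    """Return (previous, current) pairs where a heading jumps more than one level."""
    return [(a, b) for a, b in zip(levels, levels[1:]) if b - a > 1]

draft = "Keep it short. " + " ".join(["word"] * 25) + "."
print(len(long_sentences(draft)))      # 1
print(heading_skips([1, 2, 4, 2, 3]))  # [(2, 4)]
```

Here `[1, 2, 4, 2, 3]` stands for a page whose headings go H1, H2, H4, H2, H3. The check flags the jump from H2 straight to H4.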

The Future of Google NLPs

Google’s Natural Language API and Cloud TPUs are now available for anyone to use. So, if you need a deep learning platform to perform NLP tasks, you can use Google’s natural language APIs. You can even train your own NLP models on Google Cloud if you want!
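As a rough idea of what calling the API involves, the sketch below builds the JSON body for an entity sentiment request to the Cloud Natural Language REST endpoint. It only prepares the request and does not send it. Sending it requires a Google Cloud project and credentials, which are assumed here and not shown. The helper function name is made up for this example.

```python
import json

# REST endpoint of the Cloud Natural Language API (v1) for entity sentiment.
ENDPOINT = "https://language.googleapis.com/v1/documents:analyzeEntitySentiment"

def build_entity_sentiment_request(text: str) -> str:
    """Return the JSON body for an entity sentiment request (not sent here)."""
    body = {
        "document": {"type": "PLAIN_TEXT", "content": text},
        "encodingType": "UTF8",
    }
    return json.dumps(body)

review = "The staff were friendly, but the checkout page was painfully slow."
print(ENDPOINT)
print(build_entity_sentiment_request(review))
```

Running your own reviews or page copy through a request like this is one way to see which entities Google’s models pick out and how positive or negative the text around them reads.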

Optimize for Google Natural Language API & Get Results

One thing is clear: natural language APIs are here to stay. As we can see from the progression between the BERT model and SMITH model, Google search algorithms will only continue to understand your content better and better.

Let your mantra remain the same: Focus on content, focus on quality. While SEOs will keep learning and experimenting to find out what works best for Google’s NLP algorithms, always stick to best practices for SEO. Keep in mind that what you write will affect your ranking, but so will what your customers and visitors write, thanks to sentiment analysis.

SearchAtlas’s AI Content Generation tool is built on Google’s Natural Language API, so you can produce the highest quality content with reduced effort.

Popular Articles

Want access to the leading SEO software suite on the market?

See why the world's best companies choose LinkGraph to drive leads, traffic and revenue.

“They are dedicated to our success and are a thoughtful sounding board when we run ideas by them - sometimes on ideas and projects that are tangential to our main SEO project with them. Also, I love that they allow for shorter-term contracts compared to the usual 1-year contract with other SEO companies. Lastly, they deliver on their promises.”

Enter your website URL and we’ll give you a personalized step-by-step action plan showing what exactly you need to do to get more traffic.

- Better tools

- Bigger data

- Smarter SEO Insights

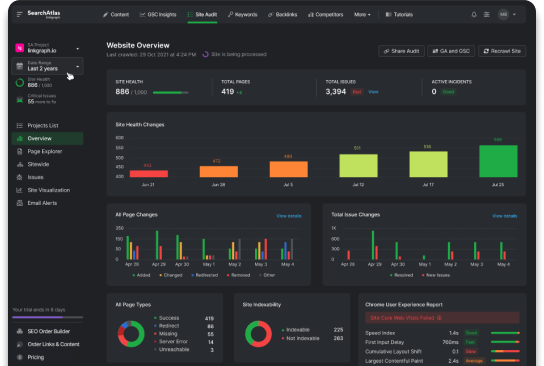

Real-time SEO Auditing & Issue Detection

Get detailed recommendations for on-page, off-site, and technical optimizations.